Analysis shows a spike in the number of malicious pop-ups coming from Check-tl-ver websites. This is a rather common aggressive-marketing tactic: the site tricks users into allowing notifications and then spams them. Let’s figure out what this scam is and how to stop Check-tl-ver pop-ups.

What are Check-tl-version pop-up notifications?

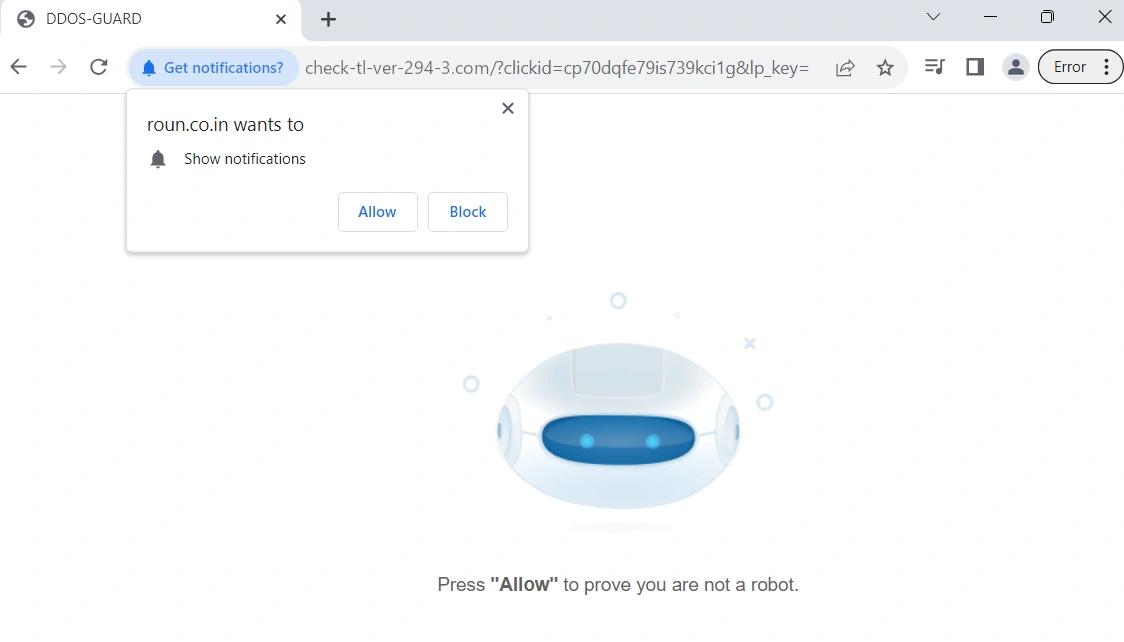

Pop-up notifications from Check-tl-version sites are a spam campaign that aims to earn money from pay-per-view and pay-per-click advertisements. There is an entire chain of such sites, created by the same group of cybercriminals and serving the same purpose. The fraudsters behind the campaign lure people into pressing the “Allow notifications” button that appears as soon as one enters the site. The request may be disguised as a captcha, DDoS protection, or the like.

List of domains involved in the scam

| URL | Registered | Scan report |
|---|---|---|
| Check-tl-ver-u99-a.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-b.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-c.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-d.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-e.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-f.buzz | 2024-10-09 | Report |
| Check-tl-ver-u99-g.buzz | 2024-10-09 | Report |
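Since the listed domains differ only in one letter, they can be matched with a single pattern, for example when filtering proxy logs or browsing history. The sketch below is a minimal illustration: the function name `is_listed_scam_host` is hypothetical, and the `a` to `g` range simply mirrors the table above, so domains registered later would not be caught.

```python
import re
from urllib.parse import urlsplit

# Pattern inferred from the seven domains in the table above.
# Assumption: only the trailing letter (a-g) varies.
SCAM_HOST = re.compile(r"check-tl-ver-u99-[a-g]\.buzz", re.IGNORECASE)


def is_listed_scam_host(url: str) -> bool:
    """Return True if the URL's host is one of the listed domains."""
    # urlsplit only recognises the host when '//' precedes it,
    # so bare domains get the prefix added.
    if "//" not in url:
        url = "//" + url
    host = urlsplit(url).hostname or ""
    return SCAM_HOST.fullmatch(host) is not None


if __name__ == "__main__":
    for candidate in (
        "https://Check-tl-ver-u99-a.buzz/landing?id=1",
        "check-tl-ver-u99-g.buzz",
        "https://example.com/",
    ):
        print(candidate, is_listed_scam_host(candidate))
```

Matching on the parsed host rather than on the raw string avoids false positives when a scam domain merely appears in the path or query of an unrelated URL.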

One particular source of redirections to check-tl-version sites is browsing websites with illegal or explicit content. On sites that host pirated movies or games, as well as on adult sites, clicking anything on the page may trigger a redirection to the scam site, which will then ask you to allow notifications. That twisted form of cooperation is what makes me warn people against using such sources of software and movies.

An interesting thing about pop-up spam sites is that they work only after a redirection. Simple checks show that opening the scam page requires the correct link. Visiting the root domain, without the additional parameters in the URL, returns either a 404 error or a boilerplate page saying the domain is for sale.

How dangerous are Check-tl-version pop-ups?

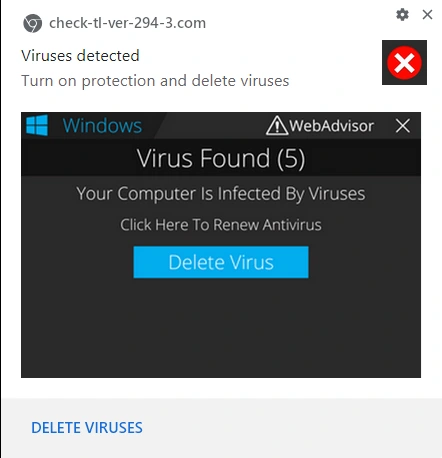

Once the user allows notifications from one of the check-tl-version websites, the site starts bombarding them with pop-ups. These notifications appear near the system tray, offering gambling or adult sites, or trying to scare the user by claiming the system is infected. Clicking on a pop-up sends the user to a website with rather questionable content. It is also pretty common to see phishing pages promoted this way, which is the main concern with this pop-up spam.

Another angle of the problem is the offer to install questionable software to solve non-existent problems. You might encounter a so-called Microsoft tech support scam page, or a site that pretends to scan your PC and falsely reports hundreds of malicious programs running at the moment. To make it harder for the user to leave, scammers make these sites open in full-screen mode, so there is no visible way out. That is, unless the user presses the Escape key.

But scams and phishing aside, the key issue is that constant pop-ups are extremely annoying. Because of the way Windows shows notifications, they appear on top of any app that is currently running. It is simply hard to concentrate on your task when you constantly hear and see banners popping up one after another. And, well, it will be quite an embarrassing moment when your boss walks by while a pop-up about “hot girls around you” is on the screen.

How to remove Check-tl-version pop-ups?

It is possible to remove the pop-up source manually, through the browser interface. To do this, open your browser settings, find the notification settings, and remove every site listed as allowed to send notifications. Restart the browser to apply the changes.

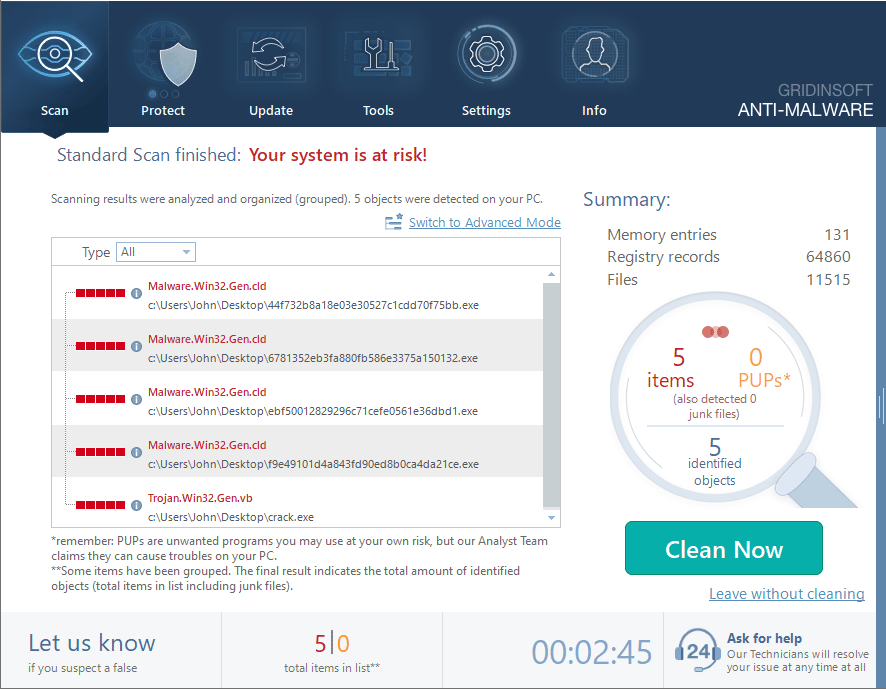
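To double-check which sites still hold notification permission, the browser profile can also be inspected from a script. The sketch below is read-only and rests on an assumption: that a Chromium-based browser keeps these permissions in its profile `Preferences` JSON file under `profile.content_settings.exceptions.notifications`, with `setting` equal to `1` for allowed sites. The layout may differ between browsers and versions, and the function names and file path are hypothetical.

```python
import json
from pathlib import Path

# Assumption: Chromium-based browsers store 1 for "allow" and 2 for "block".
ALLOW = 1


def allowed_notification_sites(prefs: dict) -> list[str]:
    """List origins that the profile currently allows to send notifications."""
    exceptions = (
        prefs.get("profile", {})
        .get("content_settings", {})
        .get("exceptions", {})
        .get("notifications", {})
    )
    sites = []
    for pattern, entry in exceptions.items():
        if isinstance(entry, dict) and entry.get("setting") == ALLOW:
            # Keys are assumed to look like "https://site.example:443,*";
            # keep only the origin part before the comma.
            sites.append(pattern.split(",")[0])
    return sorted(sites)


def load_prefs(path: Path) -> dict:
    """Parse a Preferences file; the caller supplies the profile path."""
    return json.loads(path.read_text(encoding="utf-8"))


if __name__ == "__main__":
    # Hypothetical location; adjust to the actual browser profile folder.
    prefs_path = Path("Preferences")
    if prefs_path.exists():
        for site in allowed_notification_sites(load_prefs(prefs_path)):
            print(site)
```

The script only reports what it finds. Removing the permissions is still best done through the browser settings, since the browser may overwrite manual edits to its profile files.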

There is also a second step: malware removal. It is possible that the check-tl-version pop-ups are caused by the activity of adware or browser hijackers. These two malware types often cause redirections and may alter web browser settings to suit their needs. For that reason, I recommend scanning the system with GridinSoft Anti-Malware: it will show whether there is something malicious on your device.

Download and install Anti-Malware, then run a scan. A Standard scan checks the places where this kind of malware typically keeps its files. A Full scan checks all the volumes present in the system, including hidden folders and system files, and takes around 15 minutes.

After the scan, you will see the list of detected malicious and unwanted elements. You can adjust the action that the anti-malware program applies to each element: click "Advanced mode" and pick an option from the drop-down menus. You can also see extended information about each detection: malware type, effects, and potential source of infection.

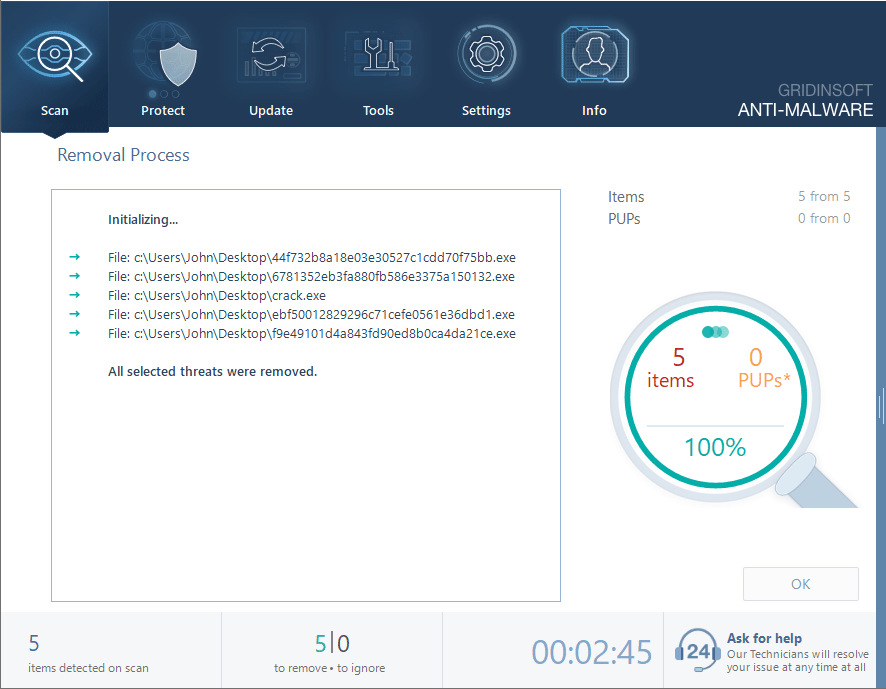

Click "Clean Now" to start the removal process. Important: the removal process may take several minutes when there are a lot of detections. Do not interrupt it, and your system will be as clean as new.